The rapid advancement of Artificial Intelligence (AI) tools has ushered in a transformative era with profound implications for the global job market. OpenAI’s introduction of ChatGPT showcased a significant leap in natural language processing, enabling AI to hold conversations and generate coherent responses.

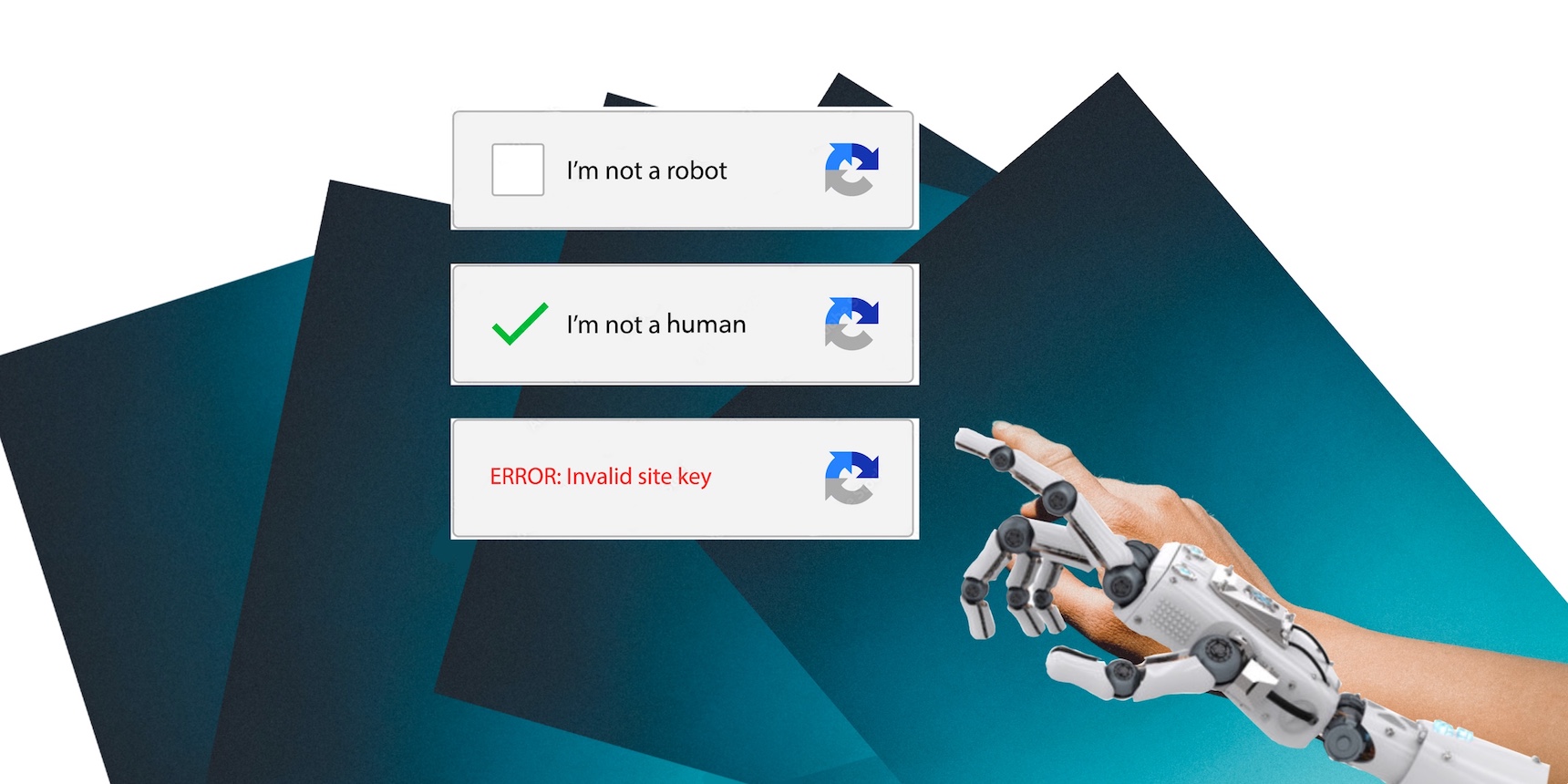

The emergence of sophisticated chatbots like ChatGPT marked a paradigm shift in how AI can be integrated across industries and job roles. As businesses integrate AI into their operations, new job opportunities will emerge, with growing demand for AI specialists, data scientists, machine learning engineers, and other roles related to AI development, implementation, and management. However, while AI presents exciting opportunities for automation and enhanced productivity, it also raises concerns about displacing human workers.

Recognizing the need to regulate this transformative technology, the EU has advanced its first-ever AI Act (AIA), touted as the world’s toughest regulation on AI. The journey toward the AIA began in 2018 with the establishment of the European AI Alliance. The Commission also appointed 52 experts to the High-Level Expert Group on Artificial Intelligence, comprising representatives from academia, business, and civil society. Their primary goal was to support the implementation of the EU Communication on Artificial Intelligence.

On June 14, 2023, the European Parliament took a significant step forward by adopting its negotiating position on the Artificial Intelligence Act. The vote was 499 in favor and 28 against, with 93 abstentions, paving the way for discussions with EU member states to finalize the shape of the law. The EU aims to achieve the “Brussels effect,” a phenomenon in which regulations and standards set in Brussels have a far-reaching impact beyond the EU’s borders. By comprehensively addressing critical AI-related questions within its territory, the EU intends to influence global AI practices and promote responsible and human-centric AI worldwide. The AIA has two primary objectives: ensuring the safe, ethical, and beneficial use of AI systems, and creating a level playing field for businesses using AI in the EU.

Below are some key points of the AIA based on its draft version:

Risk-Based Classification

The AIA classifies AI systems into three categories: unacceptable, high-risk, and low-risk. Unacceptable AI systems, such as those used for mass surveillance and predictive policing, are banned outright. High-risk AI systems, including facial recognition and autonomous driving, are subject to specific requirements such as risk assessment, compliance statements, and self-certification. Low-risk AI systems, such as product recommendations, are generally unregulated but must be transparent and free from discriminatory practices.

Promoting Responsible AI

The AIA adopts a risk-based approach and prohibits AI systems that pose an unacceptable level of risk to safety, such as those involved in social scoring and discriminatory practices. Additionally, the Act bans “real-time” and “post” remote biometric identification systems in publicly accessible spaces, predictive policing systems, and emotion recognition systems in specific contexts. Untargeted scraping of facial images for facial recognition databases is also prohibited.

Obligations on Providers

The AIA imposes obligations on providers of foundation models, requiring them to conduct risk assessments and register their models in the EU database before release. Generative AI systems, like ChatGPT, must comply with transparency requirements by disclosing that content is AI-generated, which helps distinguish deepfakes from real content. Additionally, detailed summaries of copyrighted data used for training must be made publicly available.

Encouraging Innovation

To promote AI innovation, the AIA offers exemptions for research activities and AI components under open-source licenses. It also encourages the use of regulatory sandboxes, which allow businesses to test AI in real-life environments before deployment.

Strengthening Citizens’ Rights

The AIA is designed to enhance citizens’ rights by allowing them to file complaints about AI systems and to receive explanations of decisions that affect their fundamental rights. The reformed EU AI Office will monitor the implementation of the AI rulebook, ensuring responsible AI practices and safeguarding citizens’ rights.

While the EU’s tough regulations aim to prioritize security and safety and to promote human-centric AI practices, there are concerns that they may hinder AI development in the short term. The strict rules and compliance burdens may impose additional challenges on AI developers, diverting resources from research and development. The risk-based approach could also discourage high-risk AI applications, potentially limiting experimentation and innovation with transformative AI systems.

Despite the European Commission’s goal of having 75 percent of European businesses adopt AI by the end of the decade, the AIA’s provisions may discourage AI investments. According to a report by the Center for Data Innovation, the AIA will cost the EU economy €31 billion over the next five years. Compliance costs for small or mid-sized enterprises deploying high-risk AI systems could reach €400,000, potentially reducing AI investments by nearly 20 percent.

Here are some reasons why the regulations might slow down AI development:

Compliance Burden

The stringent regulations may impose additional burdens on AI developers and businesses. They may be required to conduct risk assessments, register AI models, and comply with transparency and disclosure requirements. Meeting these obligations can be time-consuming and resource-intensive, diverting resources away from research and development.

Innovation Barriers

The risk-based approach could discourage companies from developing high-risk AI applications due to the stringent obligations and potential legal consequences. This, in turn, could lead to a reluctance to invest in cutting-edge AI technologies and limit the scope of experimentation with potentially transformative AI systems.

Lengthy Approval Processes

Requiring the registration of AI models in the EU database prior to release could result in delays in bringing new AI products and services to the market. Lengthy approval processes may hinder the timely adoption of innovative AI solutions.

Global Competitiveness

Stringent regulations may put EU businesses at a competitive disadvantage compared to companies from regions with more relaxed AI regulations. To avoid compliance complexities, businesses may choose to deploy AI applications in less regulated markets, which could weaken the EU’s position in the global AI landscape.

However, it’s important to note that tough regulations aim to ensure that AI development aligns with European values, protects fundamental rights, and promotes responsible AI use. While they may create short-term challenges, in the long run, such regulations can contribute to building trust in AI technologies and fostering a more sustainable and ethical AI ecosystem.

Moreover, the EU’s emphasis on human-centric and trustworthy AI could drive innovation that prioritizes the well-being and safety of individuals. This, in turn, may lead to AI technologies that are more widely accepted and beneficial to society. To strike the right balance between regulation and innovation, policymakers and stakeholders must weigh the potential trade-offs and continuously reassess and fine-tune the regulations to keep pace with the rapidly evolving AI landscape. Additionally, investing in research and development, promoting AI education and upskilling, and fostering collaboration between the public and private sectors can help mitigate any slowdown and ensure Europe remains at the forefront of AI development.

In conclusion, the EU’s ambitious AI Act represents a significant step towards responsible AI development. By navigating the fine line between regulation and innovation, the EU can promote AI technologies that prioritize ethics, safety, and trust while maintaining its global competitiveness in the ever-evolving AI landscape.

Armenia’s Way Forward

Amid the global transformation driven by AI, Armenia finds itself at a pivotal moment. Balancing the benefits of AI innovation with potential challenges is crucial. With a growing tech sector and emphasis on technological progress, Armenia has the opportunity to position itself strategically in the ever-evolving AI landscape. However, specific actions are necessary to ensure responsible AI development and navigate the regulatory landscape.

Firstly, Armenia should assess its current AI landscape by identifying the sectors that use AI, potential risks, and ethical concerns. Drawing inspiration from the EU’s AI Act, Armenia should tailor regulations to its unique context. This involves fostering innovation while maintaining ethical practices. Armenia has the potential to craft its own path that aligns with its unique values and aspirations.

It would be prudent for Armenia to explore adopting a framework similar to the EU’s AI Act as an integral part of its national security and cybersecurity doctrine. This would not only position Armenia as a responsible player in the global AI community but also reinforce its commitment to safeguarding its citizens’ well-being and upholding fundamental rights in the digital age.

By incorporating the principles of the EU’s AI Act into its strategic framework, Armenia can ensure that its development of AI is not only innovative, but also human-centric, secure, and ethically sound. Such an approach will not only enhance Armenia’s competitiveness in the international AI landscape, but also solidify its commitment to building a future where technology harmoniously coexists with society’s values and aspirations. Armenia stands at a crossroads, and the path it chooses will undoubtedly shape its role in the AI era and contribute to a safer and more prosperous digital future for its citizens.
